Welcome to the Multimodal Interaction Lab, run by Professor Catherine Guastavino and her passionate students and postdocs at McGill University.

We are affiliated with the Centre for Interdisciplinary Research in Music Media and Technology and McGill University’s School of Information Studies. The lab was established in 2006 with funding from the Canada Foundation for Innovation.

We investigate how people make sense of the world around them through different sensory modalities, with an emphasis on hearing. We conduct fundamental and applied research, both in natural settings and in virtual environments, to understand how best to convey information for multimodal interaction. Our team is highly interdisciplinary, and we collaborate with academics from a wide range of disciplines as well as industry partners, cultural institutions, and municipal and provincial governments.

Email: mil[dot]sis[at]mcgill[dot]ca

Main research areas

Cognitive science

Information science

Sound(scape)/noise

Built environment

The Sounds in the City partnership brings together researchers, artists, professionals who shape our cities, and citizens, to look at urban sound from a novel, resource-oriented perspective and nourish creative solutions to make cities sound better.

Sound plays a critical and complex role in the way we experience urban spaces. Today, cities treat urban sound as "noise", an isolated nuisance that should be mitigated when problems arise. But sound can also support our well-being, orientation, focus, and our lasting memories of urban spaces - even the city as a whole (e.g. music, conversation, bird chirping, water sounds).

Soundscape is a new, user-centered, proactive approach that reframes sound as a resource, in relation to other urban design considerations, from early conception to long-term use. It does away with the implicit assumption that all environmental sounds are unwanted. While soundscape has become well established in research, implementation in practice remains scarce. Ultimately, every city user deserves to live, work, play and relax in sound environments they find appropriate. Realizing this goal involves accounting for "city users'" perspectives, their activities, and the contexts in which sounds are experienced, all of which have implications for how "city makers" plan and design urban spaces. In the long run, we can improve the quality of life of residents and visitors who will benefit from a better-managed city, where sound has been considered as part of their experience.

Sounds in the City uses Montreal as a living laboratory for soundscape research in collaboration with a wide range of stakeholders from the private and public sectors, as well as the general public. Building on momentum to revisit noise policies in Canada and Quebec, we have assembled a cross-sectorial partnership to inform more equitable, sound-aware and healthier city-making.

To learn more about Sounds in the City, visit our website. You can also read up on our recent collaboration with the City of Montreal.

Funders: SSHRC, NSERC, MITACS, MSSS, MELCC, Ville de Montreal, Quartier des spectacles

The Sound in Space research program tackles a fundamental function of the auditory system, namely the ability to track sounds as they move around us. Auditory motion perception is critical for predicting the path of moving objects and guiding our actions (e.g. avoiding an approaching car or a buzzing mosquito).

This research program focuses on spatial hearing, specifically 1) auditory motion perception, 2) reverberation perception, and 3) spatial audio rendering techniques to move sound in space. The findings and tools developed have been applied to enhance immersive experience in video gaming and music production in collaboration with industry partners, and for music creation by composers. Current studies focus on sounds spinning at very high velocities and multisensory motion perception.

Funders: FRQNT, NSERC, CFI, Sennheiser, Audiokinetic, Applied Acoustics Systems

We collaborate with sound artists to shape sound environments through added sound, using sound installations in public spaces in Montreal (with the artist collectives Audiotopie and Paysage) and in Paris (with Nicolas Misdariis from IRCAM and sound artist Nadine Schütz). We are developing a methodology for research-creation collaboration between researchers and sound artists to characterize the existing sound environment, inform creation, and simulate and evaluate different interventions in the lab and on site.

We also collaborate with Guillaume Boutard on Sound Art Documentation using spatial Audio and significant Knowledge (SAD_SASK project, https://boutard.ebsi.umontreal.ca/project-sound-art-documentation/) and more generally on the preservation of works of art featuring technological components. Another line of research focuses on interactive sound installations in collaboration with Marcelo Wanderley (Fraisse et al., 2021).

Funders: SSHRC, NSERC

This research thread investigates how the brain combines multiple sources of sensory information derived from several modalities (vision, touch and audition) to form a coherent and robust percept. We combine basic research on the perceptual and cognitive mechanisms at play (e.g. Frissen et al., 2023; Frissen & Guastavino, 2014) with applied research on how these findings can inform the design of multimodal interfaces (Absar & Guastavino, 2015; Yanaky et al., in press).

Other applications include multisensory comfort evaluation in airplanes (Gauthier et al., 2016), in restaurants (Tarlao et al., 2021) and for cyclists (Ayachi et al., 2018).

Current projects focus on virtual reality simulation of outdoor environments (Yanaky et al., 2023; Tarlao et al., 2023) and digital musical instruments (Sullivan et al., 2023).

Funders: NSERC, FRQSC, CFI

This line of research focuses on music perception and cognition, including the effect of hearing protection on music performance (Boissinot et al., 2022), rhythm perception in Flamenco music (Guastavino et al., 2009), and sound quality evaluations of musical instruments (Saitis et al., 2017). Other directions include the design and evaluation of Digital Musical Instruments with a focus on user experience (Sullivan et al., 2022a,b), and tracking the creative process in music involving multiple agents and/or technological components (Pras et al., 2013a,b; Pras & Guastavino, 2013).

We are also part of the ACTOR Partnership (ANALYSIS, CREATION, and TEACHING of ORCHESTRATION https://www.actorproject.org/) which brings together artists, humanists, and scientists to propose new methods and tools for analyzing, creating and teaching orchestration.

Funders: SSHRC, NSERC

| Project | Applicants | Funding | Years |
|---|---|---|---|
| Virtual Acoustics Models for Physically-modeled Musical Instruments | C. Guastavino | NSERC, with Applied Acoustics Systems | 2015-2016 |
| Live Expression "in situ": Musical and Audiovisual Performance and Reception | M. Wanderley, C. Guastavino and 8 others | CFI | 2015 |
| Spatialized and interactive artificial binaural reverberation | C. Guastavino | NSERC, with Audiokinetic | 2014-2015 |
| Digital Musical Instrument Performance Research and Rapid Prototyping Laboratory (PR2P) | M. Wanderley, C. Guastavino and S. Ferguson | CFI | 2014-2019 |
| Upper limits of auditory motion perception | C. Guastavino | NSERC | 2011-2016 |
| A global sustainable soundscape network | B. Pijanowski and C. Guastavino | NSF | 2011-2016 |
| Physical characterization and perception of vibration transmission of road bike components | Y. Champoux, C. Guastavino and 2 others | NSERC, with Cervelo | 2010-2013 |
| Vibroacoustics of large-scale, complex, multi-material structures in aerospace and terrestrial transport | A. Berry, C. Guastavino and 8 others | CFI | 2009-2012 |
| Soundscapes of European Cities and Landscapes | J. Kang, C. Guastavino and others | Description | 2009-2012 |
| Soundfield rendering in aircraft cabins/cockpits | A. Berry, C. Guastavino | NSERC, with Bombardier and CAE | 2008-2011 |
| Perception of Audio Quality | I. Fujinaga, C. Guastavino and 3 others | FRQSC | 2008-2010 |
| Perception of rhythmic similarity in flamenco music | C. Guastavino | CIRMMT | 2007-2008 |
| Haptics, Sound and Interaction in the Design of Enactive Interfaces | M. Wanderley, C. Guastavino and 4 others | NSERC | 2006-2008 |
| Perceptual evaluation of human-computer interaction: Applications to the design of adaptive multimodal systems | C. Guastavino | CFI | 2006-2011 |
| Auditory perception of space | C. Guastavino | FRQNT | 2006-2008 |

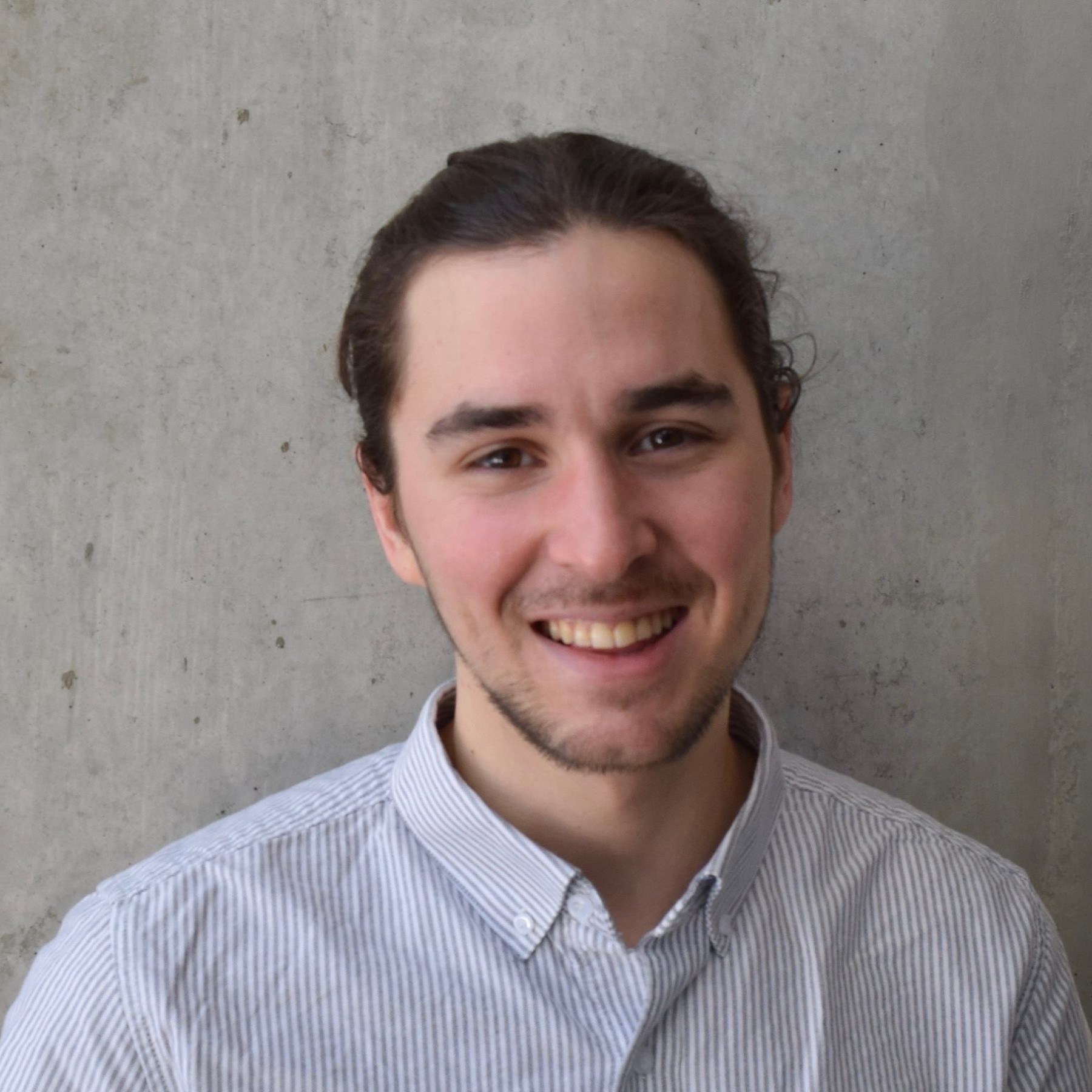

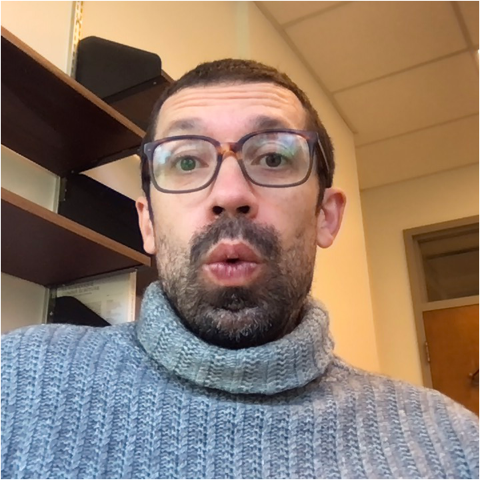

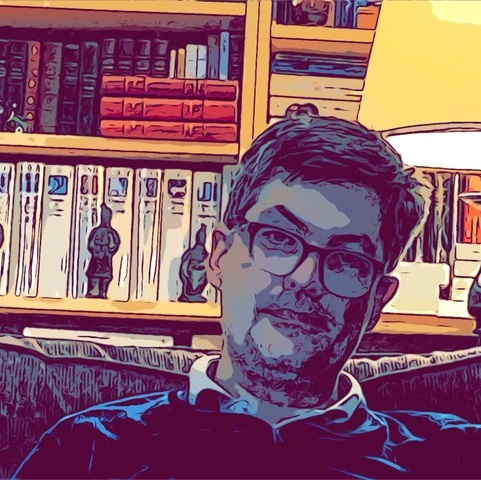

Postdoctoral researcher

Doctoral student | Information Studies

Graduate research trainee | Information Studies

Master's student | Physiology

Master's student | Information Studies

Prospective students

If you are interested in joining the Multimodal Interaction Lab, contact Prof. Guastavino in advance of the application deadline of the corresponding program. We are recruiting motivated graduate students in the PhD program in Information Studies, the Master’s program in Information Studies (project option), and the MA and PhD programs in Music Technology.

We also invite advanced McGill undergraduate students from related fields (e.g. Cognitive Science, Psychology) to apply for volunteering opportunities, research courses or honours theses related to our research projects.

Applicants with research experience in psychoacoustics (particularly spatial hearing), soundscape, multimodal user-centred design, and music information are especially encouraged to apply. To apply, e-mail Prof. Guastavino (catherine[DOT]guastavino[at]mcgill[DOT]ca) a brief description of your career goals, past research experience (including a sample publication), description of your research interests (in 1 or 2 paragraphs), and attach a CV (describing your academic and professional history, publication record, past and current GPAs, and awards received).

We strongly recommend that you apply for external fellowships/scholarships to support your graduate studies (e.g. NSERC, CIHR, SSHRC, or FRQ). Note that the deadlines for these fellowships/scholarships are in the fall semester of the year prior to the September in which graduate studies begin. International students who wish to pursue doctoral studies at McGill can learn more about funding opportunities on the McGill Graduate and Postdoctoral Studies website. Applications are prepared with the supervisor’s input, and are typically due in the summer before the university application deadline. We typically provide financial support to students who make good progress in research, subject to the availability of research funding, chosen research topic, and external awards held.

Postdocs

We are recruiting a full-time postdoc to work on dynamic sound localization as part of our Sound in Space project, for 1 or 2 years starting in Fall 2024 or Winter 2025. Applicants with expertise in psychoacoustics, spatial hearing, motion perception, and/or spatial audio are encouraged to apply.

If you are interested, e-mail Prof. Guastavino (catherine[DOT]guastavino[at]mcgill[DOT]ca) a brief description of your career goals, past experience in spatial hearing (including a sample publication), description of your research interests (1 or 2 paragraphs), and attach a CV (describing your academic and professional history, publication record, past and current GPAs, and awards received).

Postdoctoral awards are also available from the provincial and federal granting agencies. Please contact Prof. Guastavino to coordinate an application to one of these programs.

Di Croce, N., Bild, E., Steele, D., & Guastavino, C. (2024). A sonic perspective for the post-pandemic future of entertainment districts: the case of Montreal’s Quartier des Spectacles. Journal of Environmental Planning and Management, 67(1), 131–154. https://doi.org/10.1080/09640568.2022.2100247

Tarlao, C., Aumond, P., Lavandier, C., & Guastavino, C. (2023). Converging towards a French translation of soundscape attributes: Insights from Quebec and France. Applied Acoustics, 211, 109572. https://doi.org/10.1016/j.apacoust.2023.109572

Trudeau, C., Tarlao, C., & Guastavino, C. (2023). Montreal soundscapes during the COVID-19 pandemic: A spatial analysis of noise complaints and residents’ surveys. Noise Mapping, 10(1). https://doi.org/10.1515/noise-2022-0169

Tarlao, C., Steele, D., Blanc, G., & Guastavino, C. (2023). Interactive soundscape simulation as a co-design tool for urban professionals. Landscape and Urban Planning, 231, 104642. https://doi.org/10.1016/j.landurbplan.2022.104642

Steele, D., Bild, E., & Guastavino, C. (2023). Moving past the sound-noise dichotomy: How professionals of the built environment approach the sonic dimension. Cities, 132, 103974. https://doi.org/10.1016/j.cities.2022.103974

Trudeau, C., King, N., & Guastavino, C. (2023). Investigating sonic injustice: A review of published research. Social Science & Medicine, 326, 115919. https://doi.org/10.1016/j.socscimed.2023.115919

Yanaky, R., Tyler, D., & Guastavino, C. (2023). City Ditty: An Immersive Soundscape Sketchpad for Professionals of the Built Environment. Applied Sciences, 13(3), 1611. https://doi.org/10.3390/app13031611

Teboul, E. J. (2022). Postscript on the Societies of Control Voltages: Composing the Superstructure (Review of Audible Infrastructures, edited by Kyle Devine and Alexandra Boudreault-Fournier). Sound Studies, 8(1). https://www.tandfonline.com/doi/full/10.1080/20551940.2021.2005285

Teboul, E. J. (2022). Review of Stereophonica, by Gascia Ouzounian. Journal of Sonic Studies, 21. https://www.researchcatalogue.net/view/558982/1625023

Sullivan, J., Wanderley, M. M., & Guastavino, C. (2022). From Fiction to Function: Imagining New Instruments through Design Workshops. Computer Music Journal, 46(3), 26–47. https://doi.org/10.1162/comj_a_00644

Tarlao, C., & Guastavino, C. (2022). On the ecological validity of soundscape reproduction in laboratory settings.

Laplace, J., & Guastavino, C. (2022). Exploring sonic experiences in church spaces: a psycholinguistic analysis. The Senses and Society, 17(3), 343–358. https://doi.org/10.1080/17458927.2022.2139134

Bild, E., Steele, D., & Guastavino, C. (2022). Revisiting Public Space Transformations from a Sonic Perspective during the COVID-19 Pandemic. Built Environment, 48(2), 244–263. https://doi.org/10.2148/benv.48.2.244

Boissinot, E., Bogdanovitch, S., Bockstael, A., & Guastavino, C. (2022). Effect of Hearing Protection Use on Pianists’ Performance and Experience: Comparing Foam and Musician Earplugs. Frontiers in Psychology, 13. https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2022.886861

Tarlao, C., Steele, D., & Guastavino, C. (2022). Assessing the ecological validity of soundscape reproduction in different laboratory settings. PLOS ONE, 17(6), e0270401. https://doi.org/10.1371/journal.pone.0270401

Fraisse, V., Giannini, N., Guastavino, C., & Boutard, G. (2022). Experiencing Sound Installations: A conceptual framework. Organised Sound, 27(2), 227–242. https://doi.org/10.1017/S135577182200036X

Boutard, G., Guastavino, C., Bernier, N., Gauthier, P.-A., Fraisse, V., Giannini, N., & Champagne, J. (2022). Review of Contemporary Sound Installation Practices in Québec. Resonance, 3(2), 177–193. https://doi.org/10.1525/res.2022.3.2.177

Bild, E., Steele, D., & Guastavino, C. (2022). Festivals and Events as Everyday Life in Montreal’s Entertainment District. Sustainability, 14(8), 4559. https://doi.org/10.3390/su14084559

Laplace, J., Bild, E., Trudeau, C., Perna, M., Dupont, T., & Guastavino, C. (2022). Encadrement du bruit environnemental au Canada. Canadian Public Policy, 48(1), 74–90. https://doi.org/10.3138/cpp.2021-009

Perna, M., Padois, T., Trudeau, C., Bild, E., Laplace, J., Dupont, T., & Guastavino, C. (2022). Comparison of Road Noise Policies across Australia, Europe, and North America. International Journal of Environmental Research and Public Health, 19(1), 173. https://doi.org/10.3390/ijerph19010173

Valentin, O., Gauthier, P.-A., Camier, C., Verron, C., Guastavino, C., & Berry, A. (2022). Perceptual validation of sound environment reproduction inside an aircraft mock-up. Applied Ergonomics, 98, 103603. https://doi.org/10.1016/j.apergo.2021.103603

Teboul, E. J. (2022). Review of Reminded By The Instruments by You Nakai. Computer Music Journal, 45(1), 85–90. https://direct.mit.edu/comj/article/45/1/85/110386/You-Nakai-Reminded-by-the-Instruments

Steele, D., Fraisse, V., Bild, E., & Guastavino, C. (2021). Bringing music to the park: The effect of Musikiosk on the quality of public experience. Applied Acoustics, 177, 107910. https://doi.org/10.1016/j.apacoust.2021.107910

Tarlao, C., Steffens, J., & Guastavino, C. (2021). Investigating contextual influences on urban soundscape evaluations with structural equation modeling. Building and Environment, 188, 107490. https://doi.org/10.1016/j.buildenv.2020.107490

Fraisse, V., Wanderley, M. M., & Guastavino, C. (2021). Comprehensive Framework for Describing Interactive Sound Installations: Highlighting Trends through a Systematic Review. Multimodal Technologies and Interaction, 5(4), 19. https://doi.org/10.3390/mti5040019

Bild, E., Steele, D., & Guastavino, C. (2021). At Home in Montreal’s Quartier des Spectacles Festival Neighborhood. Journal of Sonic Studies, 21. https://www.researchcatalogue.net/view/1278472/1278473/0/0

Steele, D., & Guastavino, C. (2021). Quieted City Sounds during the COVID-19 Pandemic in Montreal. International Journal of Environmental Research and Public Health, 18(11), 5877. https://doi.org/10.3390/ijerph18115877

Tarlao, C., Fernandez, P., Frissen, I., & Guastavino, C. (2021). Influence of sound level on diners’ perceptions and behavior in a Montreal restaurant. Applied Acoustics, 174, 107772. https://doi.org/10.1016/j.apacoust.2020.107772

Sullivan, J., Guastavino, C., & Wanderley, M. M. (2021). Surveying digital musical instrument use in active practice. Journal of New Music Research, 50(5), 469–486. https://doi.org/10.1080/09298215.2022.2029912

Malazita, J., Teboul, E. J., & Rafeh, H. (2020). Digital Humanities as Epistemic Cultures: How DH Labs Make Knowledge, Objects, and Subjects. Digital Humanities Quarterly, 14(3). https://www.digitalhumanities.org/dhq/vol/14/3/000465/000465.html

Trudeau, C., Steele, D., & Guastavino, C. (2020). A tale of three misters: the effect of water features on soundscape assessments in a Montreal public space. Frontiers in Psychology, 11. https://doi.org/10.3389/fpsyg.2020.570797

Steele, D., Kerrigan, C., & Guastavino, C. (2020). Sounds in the city: Bridging the gaps from research to practice through soundscape workshops. Journal of Urban Design, 25(5), 646–664.

Steele, D., Bild, E., Tarlao, C., & Guastavino, C. (2019). Soundtracking the Public Space: Outcomes of the Musikiosk Soundscape Intervention. International Journal of Environmental Research and Public Health, 16(10), 1865. https://doi.org/10.3390/ijerph16101865

Axelsson, Ö., Guastavino, C., & Payne, S. R. (2019). Editorial: Soundscape Assessment. Frontiers in Psychology, 10. https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2019.02514

Roggerone, V., Vacher, J., Tarlao, C., & Guastavino, C. (2019). Auditory motion perception emerges from successive sound localizations integrated over time. Scientific Reports, 9(1), 16437. https://doi.org/10.1038/s41598-019-52742-0

Payne, S. R., & Guastavino, C. (2018). Exploring the Validity of the Perceived Restorativeness Soundscape Scale: A Psycholinguistic Approach. Frontiers in Psychology, 9. https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2018.02224

Féron, F.-X., & Guastavino, C. (2018). Étudier la musique sous toutes ses formes : la démultiplication des approches scientifiques en musicologie. Histoire De La Recherche Contemporaine. La Revue Du Comité Pour l’Histoire Du CNRS, Tome VII-N°1, 40–49. https://doi.org/10.4000/hrc.1940

Roque, J., Guastavino, C., Lafraire, J., & Fernandez, P. (2018). Plating influences diner perception of culinary creativity. International Journal of Gastronomy and Food Science, 11, 55–62. https://doi.org/10.1016/j.ijgfs.2017.11.006

Ayachi, F. S., Drouet, J.-M., Champoux, Y., & Guastavino, C. (2018). Perceptual Thresholds for Vibration Transmitted to Road Cyclists. Human Factors, 60(6), 844–854. https://doi.org/10.1177/0018720818780107

Steffens, J., Steele, D., & Guastavino, C. (2017). Situational and person-related factors influencing momentary and retrospective soundscape evaluations in day-to-day life. The Journal of the Acoustical Society of America, 141(3), 1414–1425. https://doi.org/10.1121/1.4976627

Saitis, C., Fritz, C., Scavone, G. P., Guastavino, C., & Dubois, D. (2017). Perceptual evaluation of violins: A psycholinguistic analysis of preference verbal descriptions by experienced musicians. The Journal of the Acoustical Society of America, 141(4), 2746–2757. https://doi.org/10.1121/1.4980143

Camier, C., Boissinot, J., & Guastavino, C. (2016). On the robustness of upper limits for circular auditory motion perception. Journal on Multimodal User Interfaces, 10(3), 285–298. https://doi.org/10.1007/s12193-016-0225-8

Romblom, D., Depalle, P., Guastavino, C., & King, R. (2016). Diffuse field modeling using physically-inspired decorrelation filters and B-format microphones: Part I Algorithm. Journal of the Audio Engineering Society, 64(4), 177–193. https://doi.org/10.17743/jaes.2015.0093

Teboul, E. J., & Stanford, S. (2016). Sonic Decay. International Journal of Žižek Studies, 9(1). https://zizekstudies.org/index.php/IJZS/article/view/899/904

Romblom, D., Guastavino, C., & Depalle, P. (2016). Perceptual thresholds for non-ideal diffuse field reverberation. The Journal of the Acoustical Society of America, 140(5), 3908–3916. https://doi.org/10.1121/1.4967523

Rummukainen, O., Romblom, D., & Guastavino, C. (2016). Diffuse field modeling using physically-inspired decorrelation filters and B-format microphones: Part II Evaluation. Journal of the Audio Engineering Society, 64(4), 194–207. https://doi.org/10.17743/jaes.2016.0002

Gauthier, P.-A., Camier, C., Lebel, F.-A., Pasco, Y., Berry, A., Langlois, J., Verron, C., & Guastavino, C. (2016). Experiments of multichannel least-square methods for sound field reproduction inside aircraft mock-up: Objective evaluations. Journal of Sound and Vibration, 376, 194–216. https://doi.org/10.1016/j.jsv.2016.04.027

Absar, R., & Guastavino, C. (2015). The design and formative evaluation of nonspeech auditory feedback for an information system. Journal of the American Society for Information Science and Technology. https://doi.org/10.1002/asi.23282

Luizard, P., Katz, B. F. G., & Guastavino, C. (2015). Perceptual thresholds for realistic double-slope decay reverberation in large coupled spaces. Journal of the Acoustical Society of America, 137(1), 75–85. https://doi.org/10.1121/1.4904515

Steffens, J., Steele, D., & Guastavino, C. (2015). Measuring momentary and retrospective soundscape evaluations in everyday life by means of the experience sampling method. The Brunswik Society Newsletter, 30, 45–48.

Ayachi, F., Dorey, J., & Guastavino, C. (2015). Identifying factors of bicycle comfort: An online survey with enthusiast cyclists. Applied Ergonomics, 46(A), 124–136.

Steffens, J., & Guastavino, C. (2015). Trends in momentary and retrospective soundscape judgments. Acta Acustica United with Acustica, 101, 713–722.

Fernandez, P., Aurouze, B., & Guastavino, C. (2015). Plating in gastronomic restaurants: A qualitative exploration of chefs’ perception. Menu, Journal of Food and Hospitality Research, 4, 16–21. http://recherche.institutpaulbocuse.com/en/publications/publications-4464.kjsp

Luizard, P., Katz, B. F. G., & Guastavino, C. (2015). Perceived suitability of reverberation in large coupled volume concert halls. Psychomusicology: Music, Mind, and Brain, 25(3), 317–325. https://doi.org/10.1037/pmu0000109

Frissen, I., Féron, F. X., & Guastavino, C. (2014). Auditory velocity discrimination in the horizontal plane at very high velocities. Hearing Research, 316, 94–101.

Frissen, I., & Mars, F. (2014). The effect of visual degradation on anticipatory and compensatory steering control. The Quarterly Journal of Experimental Psychology, 67(3), 499–507. http://www.tandfonline.com/doi/abs/10.1080/17470218.2013.819518

Frissen, I., & Guastavino, C. (2014). Do whole-body vibrations affect spatial hearing? Ergonomics, 57(7), 1090–1101.

Boutard, G. (2014). Towards Mixed Methods Digital Curation: Facing specific adaptation in the artistic domain. Archival Science.

Rosenblum, A. L., Burr, G., & Guastavino, C. (2013). Survey: Adoption of Published Standards in Cylinder and 78rpm Disc Digitization. International Association of Sound and Audiovisual Archives Journal, 41, 40–55.

Pras, A., Guastavino, C., & Lavoie, M. (2013). The impact of technological advances on recording studio practices. Journal of the American Society for Information Science and Technology, 64(3), 612–626.

Pras, A., Cance, C., & Guastavino, C. (2013). Record producers’ best practices for artistic direction – From light coaching to deeper collaboration with musicians. Journal of New Music Research, 42(4), 381–395.

Julien, C.-A., Tirilly, P., Dinneen, J. D., & Guastavino, C. (2013). Reducing subject tree browsing complexity. Journal of the American Society for Information Science and Technology, 64(11), 2201–2223.

Boutard, G. (2013). The re-performance of digital archives of contemporary music with live electronics: a theoretical and practical preservation framework [Doctoral Thesis, McGill University]. http://digitool.library.mcgill.ca/R/-?func=dbin-jump-full&object_id=117132&silo_library=GEN01

Pras, A., & Guastavino, C. (2013). Impact of producers’ comments and musicians’ self evaluation on perceived recording quality. Music, Technology & Education, 6(1), 81–101.

Boutard, G., Guastavino, C., & Turner, J. M. (2013). A Digital Archives Framework for the Preservation of Artistic Works with Technological Components. International Journal of Digital Curation, 8(1), 42–65.

Verron, C., Gauthier, P.-A., Langlois, J., & Guastavino, C. (2013). Spectral and spatial multichannel analysis/synthesis of interior aircraft sounds. IEEE Transactions on Audio, Speech, and Language Processing, 21(7), 1317–1329.

Boutard, G., & Marandola, F. (2013). Méthode et application de la documentation des œuvres mixtes en vue de leur préservation et de leur diffusion. Circuit : Musiques Contemporaines, 22(4), 37–47.

Julien, C.-A., Tirilly, P., Leide, J. E., & Guastavino, C. (2012). Constructing a True LCSH Tree of a Science and Engineering Collection. Journal of the American Society for Information Science and Technology, 63(12), 2405–2418.

Boutard, G., & Guastavino, C. (2012). Following Gesture Following: Grounding the Documentation of a Multi-Agent Creation Process. Computer Music Journal, 36(4), 59–80.

Julien, C.-A., Guastavino, C., & Bouthillier, F. (2012). Capitalizing on Information Organization and Information Visualization for a New-Generation Catalogue. Library Trends, 6(1), 148–161.

Frissen, I., Ziat, M., Campion, G., Hayward, V., & Guastavino, C. (2012). The effect of voluntary movements on auditory-haptic and haptic-haptic temporal order judgments. Acta Psychologica, 141, 140–148.

Boutard, G., & Guastavino, C. (2012). Archiving electroacoustic and mixed music: significant knowledge involved in the creative process of works with spatialisation. Journal of Documentation, 68(6), 749–771.

Goldszmidt, S., & Boutard, G. (2012). De la documentation d’une œuvre de musique mixte. Les Cahiers Du Numérique, 8(4), 119–141.

Guastavino, C., & Boutard, G. (2012). Préservation d’artefacts culturels numériques – Introduction. Les Cahiers Du Numérique, 8(4), 9–12.

Giordano, B., Guastavino, C., Murphy, E., Ogg, M., Smith, B. K., & McAdams, S. (2011). Comparison of Dissimilarity Estimation Methods. Multivariate Behavioral Research, 46, 1–33.

Pras, A., & Guastavino, C. (2011). The role of music producers and sound engineers in the current recording context, as perceived by young professionals. Musicae Scientiae, 15(1), 73–95.

Murphy, E., Lagrange, M., Scavone, G., Depalle, P., & Guastavino, C. (2011). Perceptual evaluation of rolling sound synthesis. Acta Acustica United with Acustica, 97(5), 840–851.

Féron, F. X., Frissen, I., Boissinot, J., & Guastavino, C. (2010). Upper limits of auditory rotational motion perception. The Journal of the Acoustical Society of America, 128(6), 3703–3714. https://doi.org/10.1121/1.3502456

Souman, J. L., Giordano, P. R., Frissen, I., Luca, A. D., & Ernst, M. O. (2010). Making virtual walking real: Perceptual evaluation of a new treadmill control algorithm. ACM Transactions on Applied Perception, 7(2).

Guastavino, C., Gómez, F., Toussaint, G., Marandola, F., & Gómez, E. (2009). Measuring Similarity between Flamenco Rhythmic Patterns. Journal of New Music Research, 38(2), 175–184.

Passamonti, C., Frissen, I., & Làdavas, E. (2009). Visual recalibration of auditory spatial perception: two separate neural circuits for perceptual learning. European Journal of Neuroscience, 30(6), 1141–1150.

Souman, J. L., Frissen, I., Sreenivasa, M. N., & Ernst, M. O. (2009). Walking Straight into Circles. Current Biology, 19(18), 1538–1542.

Féron, F. X. (2009). John Zorn ou l’abolition des frontières musicales. L’Éducation Musicale, 559, 4–8.

Julien, C. A., & Cole, C. (2009). Capitalizing on Controlled Subject Vocabulary by Providing a Map of Main Subject Headings : an Exploratory Design Study. Canadian Journal of Information and Library Sciences, 33(1-2), 67–83.

Sreenivasa, M., Frissen, I., Souman, J., & Ernst, M. (2008). Walking along curved paths of different angles: the relationship between head and trunk turning. Experimental Brain Research, 191, 313–320.

Julien, C. A., Leide, J., & Bouthillier, F. (2008). Controlled user evaluations of information visualization interfaces for text retrieval: Literature review and meta-analysis. Journal of the American Society for Information Science and Technology, 59(6), 1012–1024.

Bonardi, A., Barthélémy, J., Boutard, G., & Ciavarella, R. (2008). Préservation de processus temps réel: Vers un document numérique. Document Numérique, 11(3-4), 59–80.

Pirhonen, A., & Murphy, E. (2008). Designing for the unexpected: the role of creative group work for emerging interaction design paradigms. Visual Communication, 7(3), 331–344.

Julien, C. A., & Bouthillier, F. (2008). Le catalogue réinventé. Documentation Et Bibliothèques, 54(3), 229–239.

Murphy, E., Kuber, R., McAllister, G., Strain, P., & Yu, W. (2008). An empirical investigation into the difficulties experienced by visually impaired Internet users. Universal Access in the Information Society, 7, 79–91.

Verfaille, V., Guastavino, C., & Depalle, P. (2007). Evaluation de modèles de vibrato. Les Cahiers De La Société Québécoise De Recherche En Musique, 9(1-2), 49–62.

Guastavino, C. (2007). Categorization of environmental sounds. Canadian Journal of Experimental Psychology, 60(1), 54–63.

Guastavino, C. (2006). The ideal urban soundscape: Investigating the sound quality of French cities. Acta Acustica United with Acustica, 92(6), 945–951.

Guastavino, C., & Cheminée, P. (2006). Basses fréquences en milieu urbain: qu’en disent les citadins. Acoustique Et Techniques, 44, 39–45.

Dubois, D., Guastavino, C., & Raimbault, M. (2006). A cognitive approach to soundscape: Using verbal data to access everyday life auditory categories. Acta Acustica United with Acustica, 92(6), 865–874.

Yanaky, R., Grazioli, G., Zhang, Y.-Y., & Guastavino, C. (2023). Exploring the use of soundscape sketchpads with professionals. INTER-NOISE and NOISE-CON Congress and Conference Proceedings, 268, 2188–2197.

Yanaky, R., & Guastavino, C. (2023). City Ditty – First evaluations of a new immersive audio-visual soundscape sketchpad.

Yanaky, R., & Guastavino, C. (2023). Using immersive planning tools to reimagine virtual libraries. Proceedings of the Annual Conference of CAIS/Actes Du Congrès Annuel De l’ACSI.

Laplace, J., & Guastavino, C. (2023, September). The restorativeness of churches. 10th Convention of the European Acoustics Association.

Bild, E., & Guastavino, C. (2023, May). ListenUpMTL / ÉcoutezMTL: sonic cohabitation in downtown Montreal. 47th Annual Conference of the Society for the Study of Architecture in Canada.

Laplace, J., & Guastavino, C. (2023). Evaluating the restorative potential of church buildings. INTER-NOISE and NOISE-CON Congress and Conference Proceedings, 265, 5206–5218.

Fraisse, V., Schütz, N., Guastavino, C., Wanderley, M., & Misdariis, N. (2023). Informing sound art design in public space through soundscape simulation. INTER-NOISE and NOISE-CON Congress and Conference Proceedings, 265, 3015–3024.

Boissinot, E., Bogdanovitch, S., Bocksteal, A., & Guastavino, C. (2023). Effect of Hearing Protection Use on Pianists’ Performance and Experience: Comparing Foam and Musician Earplugs. International Symposium on Performance Science 2021, 200.

Laplace, J., & Guastavino, C. (2023, May). Les effets de l’architecture religieuse en contexte séculier. 47th Annual Conference of the Society for the Study of Architecture in Canada.

Fraisse, V., Schütz, N., Wanderley, M., Guastavino, C., & Misdariis, N. (2022, July). Planning and evaluating the impact of a sound installation in a Parisian public space. Proceedings of the International Geographic Union Centennial Congress.

Yanaky, R., & Guastavino, C. (2022). Addressing transdisciplinary challenges through technology: Immersive soundscape planning tools. Proceedings of the Annual Conference of CAIS/Actes Du Congrès Annuel De l’ACSI.

Werner, K. J., Teboul, E. J., Cluett, S. A., & Azelborn, E. (2022). Modelling and Extending the RCA Mark II Synthesizer. Proceedings of the Digital Audio Effects (DAFX) Conference. https://www.dafx.de/paper-archive/2022/papers/DAFx20in22_paper_39.pdf

Botteldooren, D., Van Renterghem, T., Guastavino, C., Can, A., Fiebig, A., Wunderli, J.-M., Kang, J., & Aletta, F. (2021). Abstracts of the Second Urban Sound Symposium. Proceedings, 72(1), 4. https://doi.org/10.3390/proceedings2021072004

Yanaky, R., & Guastavino, C. (2021, April). Soundscape Design Tools for Professionals.

Bild, E., Steele, D., Yanaky, R., Tarlao, C., Di Croce, N., & Guastavino, C. (2021, April). Sound fundamentals for professionals of the built environment: a course for citymakers.

Werner, K. J., & Teboul, E. J. (2021). Analyzing a Unique Pingable Circuit: The Gamelan Resonator. Proceedings of the 151st Audio Engineering Society Convention. https://www.aes.org/e-lib/browse.cfm?elib=21506

Laplace, J., & Guastavino, C. (2021, May). Sound in space and time: exploring sensory experiences in churches.

Fraisse, V., Guastavino, C., & Wanderley, M. M. (2021). A Visualization Tool to Explore Interactive Sound Installations. NIME 2021.

Trudeau, C., & Guastavino, C. (2021). The environmental inequality of urban sound environments: a comparative analysis. Proceedings of the International Commission on the Biological Effects of Noise.

Trudeau, C., Bild, E., Padois, T., Perna, M., Dumoulin, R., Dupont, T., & Guastavino, C. (2020). Municipal noise regulations in Québec. Proceedings of Forum Acusticum.

Trudeau, C., Steele, D., Dumoulin, R., & Guastavino, C. (2018). Sounds in the city: differences in urban noise management strategies across cities. Proceedings of Inter-Noise 2018.

Trudeau, C., & Guastavino, C. (2018). Classifying soundscape using a multifaceted taxonomy. Proceedings of Euronoise 2018.

Tarlao, C., Steele, D., Fernandez, P., & Guastavino, C. (2016). Comparing soundscape evaluations in French and English across three studies in Montreal. Invited Paper at the 45th International Congress on Noise Control Engineering.

Steffens, J., Steele, D., & Guastavino, C. (2016). Der Einfluss von Musik auf die Bewertung der Umwelt. Fortschritte Der Akustik DAGA 2016.

Drouet, J.-M., Guastavino, C., & Girard, N. (2016). Perceptual thresholds for shock-type excitation of the front wheel of a road bicycle at the cyclist’s hands. Proceedings of the 11th Conference of the International Sports Engineering Association. https://doi.org/10.1016/j.proeng.2016.06.264

Bild, E., Steele, D., Tarlao, C., Guastavino, C., & Coler, M. (2016). Sharing music in public spaces: Social insights from the Musikiosk project (Montreal, CA). Invited Paper at the 45th International Congress on Noise Control Engineering.

Roque, J., Fernandez, P., Lafraire, J., & Guastavino, C. (2016). La créativité culinaire perçue par le mangeur en contexte de restauration gastronomique. Proceedings of Symposium Études Du Fait Alimentaire En Amérique.

Lavoie, M., Dorey, J., & Guastavino, C. (2016). How do enthusiast cyclists conceptualize road bicycle comfort? Proceedings of the Annual Conference of the North American Society for the Psychology of Sport and Physical Activity.

Ayachi, F. S., Champoux, Y., Drouet, J.-M., & Guastavino, C. (2016). Just noticeable differences for whole-body vibration transmitted on a road bicycle. Proceedings of the Annual Conference of the North American Society for the Psychology of Sport and Physical Activity.

Steffens, J., Steele, D., & Guastavino, C. (2016). Music influences the perception of our acoustic and visual environment. Invited Paper at the 45th International Congress on Noise Control Engineering.

Steele, D., Tarlao, C., Bild, E., & Guastavino, C. (2016). Evaluation of an urban soundscape intervention with music: Quantitative results from questionnaires. Invited Paper at the 45th International Congress on Noise Control Engineering.

Steele, D., Bild, E., Tarlao, C., Luque Martín, I., Izquierdo Cubero, J., & Guastavino, C. (2016). A comparison of soundscape evaluation methods in a large urban park in Montreal. Invited Paper at the 22nd International Congress on Acoustics.

Juge, G., Pras, A., & Frissen, I. (2016). Perceptual evaluation of Transpan for 5.1 mixing of acoustic recordings. Audio Engineering Society Convention 140. http://www.aes.org/e-lib/browse.cfm?elib=18288

Steffens, J., Steele, D., & Guastavino, C. (2015). New insights into soundscape evaluations using the experience sampling method. Invited Paper Presented at Euronoise 2015.

Steffens, J., Steele, D., & Guastavino, C. (2015). Die Experience Sampling Methode – Ein Werkzeug der Zukunft für die Soundscape-Forschung? (The Experience Sampling Method – A tool of the future for Soundscape research). In Proceedings of the German Acoustics Conference.

Ziat, M., Savord, A., & Frissen, I. (2015). The effect of visual, haptic, and auditory signals perceived from rumble strips during inclement weather. World Haptics Conference (WHC), 2015 IEEE, 351–355. http://ieeexplore.ieee.org/xpls/abs_all.jsp?arnumber=7177737

Steele, D., Steffens, J., & Guastavino, C. (2015). The role of activity in urban soundscape evaluations. Invited Paper Presented at Euronoise 2015.

Camier, C., Boissinot, J., & Guastavino, C. (2015). Does reverberation affect upper limits for auditory motion perception? Proceedings of International Conference on Auditory Display 2015.

Camier, C., Féron, F.-X., Boissinot, J., & Guastavino, C. (2015). Tracking moving sounds: perception of spatial figures. Proceedings of International Conference on Auditory Display 2015.

Steele, D., Dumoulin, R., Voreux, L., Gautier, N., Glaus, M., Guastavino, C., & Voix, J. (2015). Musikiosk: A soundscape intervention and evaluation in an urban park. Proceedings of the 59th International Conference of the Audio Engineering Society.

Steffens, J., Steele, D., & Guastavino, C. (2015). How does music affect soundscape evaluation? Proceedings of the Ninth Triennial Conference of the European Society for the Cognitive Sciences of Music.

Blum, J. R., Frissen, I., & Cooperstock, J. R. (2015). Improving Haptic Feedback on Wearable Devices through Accelerometer Measurements. Proceedings of the 28th Annual ACM Symposium on User Interface Software & Technology, 31–36. http://dl.acm.org/citation.cfm?id=2807474

Kroher, N., Guastavino, C., Gomez, E., Bonada, J., & Gomez-Martin, F. (2014). Computational models for perceived melodic similarity in a cappella flamenco singing. Proceedings of the 15th International Society for Music Information Retrieval Conference 2014.

Steffens, J., & Guastavino, C. (2014). Looking back by looking into the future – The role of anticipation and trend effects in retrospective judgments of musical excitement. Congress of the International Association of Empirical Aesthetics (IAEA).

Fernandez, P., Aurouze, B., & Guastavino, C. (2014). Plating in gastronomic cuisine: A qualitative exploration of chefs’ perception. Context Effects on Consumer Judgments.

Steffens, J., Petrenko, J., & Guastavino, C. (2014). Comparison of Momentary and Retrospective Soundscape Evaluations. Invited Paper at the 167th Meeting of the Acoustical Society of America.

Payne, S. R., & Guastavino, C. (2013). Measuring the perceived restorativeness of soundscapes. Invited Paper at the 42nd International Congress and Exposition on Noise Control Engineering.

Nielbo, F., Steele, D., & Guastavino, C. (2013). Investigating soundscape affordances through activity appropriateness. Proc. ICA 2013, 19, 040059.

Weigl, D. M., Sears, D., Hockman, J. A., McAdams, S., & Guastavino, C. (2013). Investigating the Effects of Beat Salience on Beat Synchronization Judgements during Music Listening. Conference Program of the Biennial Meeting of the Society for Music Perception and Cognition.

Ziat, M., Frissen, I., Campion, G., Hayward, V., & Guastavino, C. (2013). Plucked String Stiffness Affects Loudness Perception. International Workshop on Haptic and Audio Interaction Design, 79–88. http://link.springer.com/chapter/10.1007/978-3-642-41068-0_9

Steele, D., Krijnders, D., & Guastavino, C. (2013). The Sensor City Initiative: cognitive sensors for soundscape transformations. Proceedings of GIS Ostrava 2013: Geoinformatics for City Transformations.

Dorey, J., Ayachi, S., & Guastavino, C. (2013). The information world of enthusiast cyclists. Proceedings of the 41st Annual Meeting of the Canadian Association for Information Science.

Weigl, D. M., & Guastavino, C. (2013). Applying the Stratified Model of Relevance Interactions to Music Information Retrieval. 76th Annual Meeting of the Association for Information Science and Technology.

Luizard, P., Katz, B., & Guastavino, C. (2013). Perception of reverberation in large coupled volumes: discrimination and suitability. Proceedings of the International Symposium on Room Acoustics.

Romblom, D., King, R., & Guastavino, C. (2013). A perceptual evaluation of recording, rendering and reproduction techniques for multichannel spatial audio. Proceedings of the 135th Convention of the Audio Engineering Society.

Weigl, D. M., Sears, D., & Guastavino, C. (2013). Examining the reliability and predictive validity of beat salience judgements. 3rd Seminar on Cognitively Based Music Informatics Research Abstracts.

Boutard, G., & Guastavino, C. (2013). The Performing World of Digital Archives. Actes De La 4ème Conférence Document Numérique Et Société, 13–24.

Boutard, G., & Marandola, F. (2013). Documentation des processus créatifs pour la préservation et la dissémination des œuvres mixtes. Proceedings of Tracking the Creative Process in Music 2013, 35–37.

Romblom, D., King, R., & Guastavino, C. (2013). A perceptual evaluation of room effect methods for multichannel spatial audio. Proceedings of the 135th Convention of the Audio Engineering Society.

Steele, D., & Guastavino, C. (2013). How do urban planners conceptualize and contextualize soundscape in their everyday work? Invited Paper at the 42nd International Congress and Exposition on Noise Control Engineering.

Boutard, G., Guastavino, C., & Turner, J. M. (2012). Digital sound processing preservation: impact on digital archives. Proceedings of the 2012 IConference, 177–182.

Julien, C.-A., Tirilly, P., Leide, J. E., & Guastavino, C. (2012, January). Exploiting major trends in subject hierarchies for large-scale collection visualization.

Steele, D., Luka, N., & Guastavino, C. (2012, March). Closing the gaps between soundscape and urban designers. Proceedings of Acoustics 2012, Joint Meeting of the 2012 IOA Annual Meeting and the 11th Congrès Français d’Acoustique.

Romblom, D., Guastavino, C., & King, R. (2012, October). A comparison of recording, rendering and reproduction techniques for multichannel spatial audio. Proceedings of the 133rd Convention of the Audio Engineering Society (AES 2012).

Féron, F. X., & Boutard, G. (2012, April). L’(a)perception de l’électronique par les interprètes dans les œuvres mixtes en temps réel pour instrument seul. http://musiquemixte.sfam.org/?page_id=105

Julien, C.-A., Tirilly, P., Leide, J. E., & Guastavino, C. (2012). Using the LCSH hierarchy to browse a collection. Proceedings of the 12th International ISKO (International Society for Knowledge Organization) Conference.

Frissen, I., & Guastavino, C. (2012, March). Effects of whole-body vibrations on auditory localization. Proceedings of Acoustics 2012, Joint Meeting of the 2012 IOA Annual Meeting and the 11th Congrès Français d’Acoustique.

Gómez, E., Guastavino, C., Gómez, F., & Bonada, J. (2012, July). Analyzing Melodic Similarity Judgments in Flamenco a Cappella Singing. Proceedings of the Joint 12th International Conference on Music Perception and Cognition (ICMPC) and 8th Triennial Conference of the European Society for the Cognitive Sciences of Music (ESCOM).

Saitis, C., Guastavino, C., Giordano, B. L., Fritz, C., & Scavone, G. (2012, July). Investigating consistency in verbal descriptions of violin preference by experienced players. Proceedings of the Joint 12th International Conference on Music Perception and Cognition (ICMPC) and 8th Triennial Conference of the European Society for the Cognitive Sciences of Music (ESCOM).

Weigl, D. M., Guastavino, C., & Levitin, D. J. (2012, August). The Effect of Rhythmic Distortion on Melody Recognition.

Botteldooren, D., Lavandier, C., Preis, A., Dubois, D., Aspuru, I., Guastavino, C., Brown, L., Nilsson, M., & Andringa, T. C. (2011). Understanding urban and natural soundscapes. Proceedings of Forum Acusticum 2011.

Cance, C., & Guastavino, C. (2011). User-centered methodologies to evaluate digital musical instruments. Paper Presented at the Conference The Ghost in the Machine: Technologies, Performance, Publics.

Pras, A., & Guastavino, C. (2011). The impact of producers’ comments and musicians’ self-evaluation on performance during recording sessions. Proceedings of the 131st Convention of the Audio Engineering Society.

Weigl, D. M., Guastavino, C., & Levitin, D. J. (2011). The effect of rhythmic distortion on melody recognition. Conference Program of the 2011 Biennial Meeting of the Society for Music Perception and Cognition.

Cance, C., Guastavino, C., Malloch, J., & Wanderley, M. M. (2011). Investigating the notion of instrumentality for digital music instruments. Panel Presented at the Conference The Ghost in the Machine: Technologies, Performance, Publics.

Verron, C., Gauthier, P.-A., Langlois, J., & Guastavino, C. (2011). Binaural analysis/synthesis of interior aircraft sounds. 2011 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics.

Langlois, J., Verron, C., Gauthier, P.-A., & Guastavino, C. (2011). Perceptual Evaluation of interior aircraft sound models. 2011 IEEE Workshop on Applications of Signal Processing to Audio and Acoustics.

Hockman, J. A., Weigl, D. M., Guastavino, C., & Fujinaga, I. (2011). Discrimination Between Phonograph Playback Systems. Audio Engineering Society Convention 131.

Cance, C., Guastavino, C., & Dubois, D. (2011). Languages and conceptualizations of soundscapes: A cross-linguistic analysis. Proceedings of the 162nd Meeting of the Acoustical Society of America, Oct. 31 – Nov. 4, 130(4), 2495.

Dorey, J., & Guastavino, C. (2011). Moving forward: Conceptualizing comfort in information sources for enthusiast cyclists. Proceedings of the American Society for Information Science and Technology, 48, 1–9.

Boutard, G., & Guastavino, C. (2011). Informer les archives des musiques électroacoustiques et mixtes par les processus de création. Proceedings of Tracking the Creative Process in Music 2011.

Guastavino, C., & Pijanowski, J. (2011). Soundscape ecology: a worldwide network. Invited Paper at the 162nd Meeting of the Acoustical Society of America, Oct. 31 – Nov. 4, 130(4), 2531.

Pras, A., & Guastavino, C. (2011). Diriger l’écoute afin d’enregistrer la meilleure performance possible. Proceedings of Tracking the Creative Process in Music.

Weigl, D. M., & Guastavino, C. (2011). User Studies in the Music Information Retrieval Literature. Proceedings of the 12th International Society for Music Information Retrieval Conference.

Absar, R., & Guastavino, C. (2011). Nonspeech sound design for a hierarchical information system. Proceedings of the 14th International Conference on Human Computer Interaction (HCI International 2011), 461–470.

Daniel, A., Guastavino, C., & McAdams, S. (2010, April). Effet du rapport signal-sur-bruit sur l’angle minimum audible en présence d’un son distracteur. Proceedings of the 10th Congrès Français d’Acoustique (CFA10).

Bouchara, T., Katz, B., Jacquemin, C., & Guastavino, C. (2010, June). Audio-visual renderings for multimedia navigation. Proceedings of the 16th International Conference on Auditory Display (ICAD-2010).

Payne, S. R. (2010). Urban Park soundscapes and their perceived restorativeness. Proceedings of the Institute of Acoustics, 264–271.

Pras, A., & Guastavino, C. (2010, May). Sampling rate discrimination: 44.1 kHz vs. 88.2 kHz. Proceedings of the 128th Convention of the Audio Engineering Society (AES).

Julien, C. A., Guastavino, C., Bouthillier, F., & Leide, J. (2010). Subject Explorer 3D: a Virtual Reality collection browsing and searching tool. Proceedings of the Annual Conference of the Canadian Association for Information Science. http://www.cais-acsi.ca/proceedings/2010/CAIS062_JulienGuastavinoBouthillierLeide_Final.pdf

Bouchara, T., Giordano, B., Frissen, I., Katz, B., & Guastavino, C. (2010, May). Effect of Signal-to-Noise Ratio and Visual Context on Environmental Sound Identification. Proceedings of the 128th Convention of the Audio Engineering Society (ID 173).

Payne, S. R., & Guastavino, C. (2010, June). Back to Basics. Considering the composition and terminology of Perceived Restorativeness (Soundscape) Scales. Proceedings of the 21st International Association People-Environment Studies.

Rosenblum, A., Guastavino, C., & Burr, G. (2010, November). Best Practices in the Preservation and Digitisation of 78rpm Discs and Cylinder Recordings. Proceedings of the 41st Annual Conference of the International Association of Sound and Audiovisual Archives (IASA).

Pras, A., Zimmerman, R., Levitin, D. J., & Guastavino, C. (2009, October). Subjective evaluation of mp3 compression for different musical genres. Proceedings of the 127th Convention of the Audio Engineering Society (AES).

Frissen, I., Katz, B., & Guastavino, C. (2009, May). Perception of reverberation in large single and coupled volumes. Electronic Proceedings of the 15th International Conference on Auditory Display (ICAD 2009).

Giordano, B., Guastavino, C., Murphy, E., Ogg, M., Smith, B., & McAdams, S. (2009, November). Common and distinctive features of similarity: Effect of behavioral method. Proceedings of the Annual Meeting of the Psychonomic Society.

Féron, F. X., Frissen, I., & Guastavino, C. (2009, May). Upper limits of auditory motion perception: the case of rotating sounds. Electronic Proceedings of the 15th International Conference on Auditory Display (ICAD 2009).

Pras, A., & Guastavino, C. (2009, August). Improving the sound quality of recordings through communication between musicians and sound engineers. Electronic Proceedings of the 2009 International Computer Music Conference (ICMC).

Julien, C. A., Guastavino, C., & Bouthillier, F. (2009, November). A Future Generation OPAC in Virtual Reality / Un catalogue prochaine génération en réalité virtuelle.

Pras, A., Corteel, E., & Guastavino, C. (2009, March). Qualitative evaluation of Wave Field Synthesis with expert listeners. Invited Paper at the NAG-DAGA International Conference on Acoustics.

Pras, A., Féron, F. X., & Demers, K. (2009, August). The pre-production process of New York Counterpoint for clarinet and tape written by Steve Reich. Electronic Proceedings of the 2009 International Computer Music Conference (ICMC).

Frissen, I., Ziat, M., Campion, G., Hayward, V., & Guastavino, C. (2009, July). Auditory-tactile temporal order judgments during active exploration. Electronic Proceedings of International Multisensory Research Forum 2009 (IMRF’09).

Dubois, D., & Guastavino, C. (2008, July). Noise(s) and sound(s): comparing various conceptualizations of acoustic phenomena across languages. Invited Paper at Acoustics ’08 (Joint Meeting of the 155th Meeting of the Acoustical Society of America, 5th Forum Acusticum, and 9th Congrès Français d’Acoustique).

Absar, R., & Guastavino, C. (2008, June). Usability of non-speech sounds in user interfaces. Proceedings of the 14th International Conference on Auditory Display (ICAD ’08).

Guastavino, C., & Dubois, D. (2008, July). Soundscapes: from noise annoyance to the music of urban life. Invited Paper at Acoustics ’08 (Joint Meeting of the 155th Meeting of the Acoustical Society of America, 5th Forum Acusticum, and 9th Congrès Français d’Acoustique).

Verfaille, V., Guastavino, C., & Depalle, P. (2008, July). Control parameters of a generalized vibrato model with modulations of harmonics and residual. Invited Paper at Acoustics ’08 (Joint Meeting of the 155th Meeting of the Acoustical Society of America, 5th Forum Acusticum, and 9th Congrès Français d’Acoustique).

Benovoy, M., Zadel, M., & Absar, R. (2008, August). Towards multimodal immersive gameplay. Proceedings of Eurosis GAMEON-NA 2008.

Bonardi, A., Barthélémy, J., Ciavarella, R., & Boutard, G. (2008). Will software modules for performing arts be sustainable? Proceedings of the 14th IEEE Mediterranean Electrotechnical Conference MELECON’08.

Bonardi, A., Barthélémy, J., Boutard, G., & Ciavarella, R. (2008). First Steps in Research and Development about the Sustainability of Software Modules for Performing Arts. Proceedings of Journées d’Informatique Musicale JIM’08.

Guastavino, C., Marandola, F., Toussaint, G., Gomez, F., & Absar, R. (2008, July). Perception of Rhythmic Similarity in Flamenco Music: Comparing Musicians and Non-Musicians. Proceedings of the 4th Conference on Interdisciplinary Musicology (CIM ’08).

Murphy, E., Lagrange, M., Scavone, G., Depalle, P., & Guastavino, C. (2008, September). Perceptual Evaluation of a Real-time Synthesis Technique for Rolling Sounds. Proceedings of Enactive ’08.

Murphy, E., Moussette, C., Verron, C., & Guastavino, C. (2008, August). Design and Evaluation of an Audio-Haptic Interface. Proceedings of ENTERFACE ’08 Workshop.

Guastavino, C., Toussaint, G., Gomez, F., Marandola, F., & Absar, R. (2008, July). Rhythmic Similarity in Flamenco Music: Comparing Psychological and Mathematical Measures. Proceedings of the 4th Conference on Interdisciplinary Musicology (CIM ’08).

Ng, K., Mikroyannidis, A., Ong, B., Bonardi, A., Barthélémy, J., Ciavarella, R., & Boutard, G. (2008, August). Ontology Management for Preservation of Interactive Multimedia Performances. Proceedings of International Computer Music Conference ICMC’08.

Arseneau, F., & Guastavino, C. (2008, June). Différentes conceptions de l’expression "information design" selon les disciplines et champs d’expertise: analyse de la littérature anglophone. Proceedings of CAIS/ACSI 2008: 36th Annual Conference of the Canadian Association for Information Science = 36e Congrès De l’Association Canadienne Des Sciences De l’Information.

Barthélémy, J., Bonardi, A., Boutard, G., & Ciavarella, R. (2008, August). Our Research for Lost Route to Root. Proceedings of International Computer Music Conference ICMC’08.

Dubois, D., & Guastavino, C. (2007, September). Cognitive evaluation of sound quality: Bridging the gap between acoustic measurements and meanings. Invited Paper at the 19th International Congress on Acoustics - ICA07.

Absar, R., Gomez, F., Guastavino, C., Marandola, F., & Toussaint, G. (2007). Perception of meter similarity in flamenco music. Proceedings of the 2007 Annual Conference of the Canadian Acoustical Association (CAA), 46–47.

Salimpoor, V. N., Guastavino, C., & Levitin, D. J. (2007, October). Subjective evaluation of popular audio compression formats. Proceedings of the 123rd Audio Engineering Society (AES) Convention.

Guastavino, C., & Belin, P. (2007, September). Voice categorization using free sorting tasks.

Guastavino, C., Larcher, V., Catusseau, G., & Boussard, P. (2007, June). Spatial audio quality evaluation: Comparing transaural, ambisonics and stereo. Invited Paper at the 13th International Conference on Auditory Display – ICAD 2007.

Guastavino, C., & Verfaille, V. (2007, August). Perceptual Evaluation of Vibrato Features: The Case of Saxophone Sounds.

Julien, C. A., Bouthillier, F., & Leide, J. (2006). Spatialized Information Visualizations: a "BASSTEP" Approach to Application Design. Proc. of Annual Conf. of the American Society for Information Science and Technology.

Verfaille, V., Guastavino, C., & Traube, C. (2006). An interdisciplinary approach to audio effect classification. Proceedings of the 9th DAFx (Digital Audio Effects) Conference, 107–114.

Guastavino, C., & Dubois, D. (2006, December). From language and concepts to physics: How do people cognitively process soundscapes? Invited Paper at the Internoise 2006 Congress.

Dubois, D., & Guastavino, C. (2006, December). In search for soundscape indicators: Physical descriptors of semantic categories. Invited Paper at the Internoise 2006 Congress.

Bouthillier, F., Jin, T., & Julien, C. A. (2006). The Use of Competitive Intelligence Technology by Competitive Intelligence Practitioners: A Comparison Between the Public and Private Sectors. Proc. of the Annual Conf. of the Society of Competitive Intelligence Professionals (SCIP).

Absar, R., & Whitesides, S. (2006, August). On Computing Shortest External Watchman Routes for Convex Polygons. Proceedings of the 18th Canadian Conference on Computational Geometry (CCCG 2006).

Guastavino, C., & Dubois, D. (2006, September). Ecological explorations of soundscapes: From verbal analysis to experimental settings. Invited Paper at the 4th Joint Meeting of the Acoustical Society of America and the Acoustical Society of Japan.

Guastavino, C., & Dubois, D. (2006, May). The ideal urban soundscape: investigating the sound quality of French cities. Proceedings of Euronoise 2006.

Teboul, E. J. (2024). All Patched Up: a Material and Discursive History of Modularity and Control Voltages. In E. J. Teboul, A. Kitzmann, & E. Engstrom (Eds.), Modular Synthesis: Patching Machines and People. Routledge. https://www.routledge.com/Modular-Synthesis-Patching-Machines-and-People/Teboul-Kitzmann-Engstrom/p/book/9781032113463

Teboul, E. J., Kitzmann, A., & Engstrom, E. (Eds.). (2024). Modular Synthesis: Patching Machines and People. Routledge. https://www.routledge.com/Modular-Synthesis-Patching-Machines-and-People/Teboul-Kitzmann-Engstrom/p/book/9781032113463

Evrard, A.-S., Avan, P., Cadène, A., Guastavino, C., Martin, R., & Mietlicki, F. (2023). Bruit. In M. Debia, P. Glorennec, J.-P. Gonzalez, I. Goupil-Sormany, & N. Noisel (Eds.), Manuel de santé environnementale. Fondements et pratiques. (pp. 737–768). Presses de l’EHESP.

Guastavino, C., Fraisse, V., D’Ambrosio, S., Legast, E., & Lavoie, M. (2022). Designing Sound Installations in Public Spaces: A Collaborative Research-Creation Approach. In Designing Interactions for Music and Sound (pp. 108–133). Focal Press.

Dubois, D., Cance, C., Coler, M., Paté, A., & Guastavino, C. (2021). Sensory Experiences: Exploring meaning and the senses. John Benjamins. https://doi.org/10.1075/celcr.24

Paté, A., Dubois, D., & Guastavino, C. (2021). Free sorting tasks for exploring sensory categories. In D. Dubois, C. Cance, M. Coler, A. Paté, & C. Guastavino (Eds.), Sensory experiences: Exploring meaning and the senses. John Benjamins.

Dubois, D., Cance, C., Coler, M., Paté, A., & Guastavino, C. (2021). Questioning sensory experiences. In D. Dubois, C. Cance, M. Coler, A. Paté, & C. Guastavino (Eds.), Sensory experiences: Exploring meaning and the senses. John Benjamins.

Dubois, D., Cance, C., Coler, M., Paté, A., & Guastavino, C. (2021). From perception to sensory experiences: a paradigm shift. In D. Dubois, C. Cance, M. Coler, A. Paté, & C. Guastavino (Eds.), Sensory experiences: Exploring meaning and the senses. John Benjamins.

Guastavino, C. (2021). Exploring soundscapes. In D. Dubois, C. Cance, M. Coler, A. Paté, & C. Guastavino (Eds.), Sensory experiences: Exploring meaning and the senses (pp. 139–167). John Benjamins.

Teboul, E. J. (2020). Bleep Listening. In N. Collins (Ed.), Handmade Electronic Music: The Art of Hardware Hacking (Third Edition, online content). Routledge. https://routledgetextbooks.com/textbooks/9780367210106/culture_history.php

Teboul, E. J. (2020). A Method for the Analysis of Handmade Electronic Music as the Basis of New Works [Dissertation, Rensselaer Polytechnic Institute]. https://archive.org/details/teboul-2020-a-method-for-the-analysis-of-handmade-electronic-music-as-the-basis-of-new-works

Teboul, E. J. (2020). Hacking Composition: Dialogues With Musical Machines. In M. Cobussen & M. Bull (Eds.), The Bloomsbury Handbook of Sonic Methodologies. Bloomsbury Academic. https://doi.org/10.5040/9781501338786.ch-052

Guastavino, C. (2018). Everyday Sound Categorization. In T. Virtanen, M. D. Plumbley, & D. Ellis (Eds.), Computational Analysis of Sound Scenes and Events (pp. 183–213). Springer International Publishing. https://doi.org/10.1007/978-3-319-63450-0_7

Teboul, E. J. (2018). Electronic Music Hardware and Open Design Methodologies for Post-Optimal Objects. In J. Sayers (Ed.), Making Things and Drawing Boundaries: Experiments in the Digital Humanities (pp. 177–184). University of Minnesota Press. https://dhdebates.gc.cuny.edu/read/untitled-aa1769f2-6c55-485a-81af-ea82cce86966/section/bcbaf93f-c2a5-4bb1-85f5-13da2c749b90

Bild, E., Steele, D., Pfeffer, K., Bertolini, L., & Guastavino, C. (2018). Activity as a mediator between users of public spaces and their interpretation of the auditory environment. Lessons from the Musikiosk soundscape intervention. In F. Aletta & J. Xiao (Eds.), Handbook of Research on Perception-driven approaches to urban assessment and design (pp. 100–125). IGI Global.

Boutard, G., & Guastavino, C. (2018). Cultural Heritage, Information Science, and the Creative Process. In N. Donin (Ed.), The Oxford Handbook of the Creative Process in Music (pp. 1–23). Oxford University Press. https://doi.org/10.1093/oxfordhb/9780190636197.013.33

Teboul, E. J. (2017). The Transgressive Practices of Silicon Luthiers. In E. Miranda (Ed.), Guide to Unconventional Computing for Music (pp. 85–120). Springer. https://link.springer.com/chapter/10.1007/978-3-319-49881-2_4

Botteldooren, D., Andringa, T., Aspuru, I., Brown, A. L., Dubois, D., Guastavino, C., Kang, J., Lavandier, C., Nilsson, M., Preis, A., & Schulte-Fortkamp, B. (2016). From sonic environment to soundscape. In J. Kang & B. Schulte-Fortkamp (Eds.), Soundscape and the built environment (pp. 17–42). CRC Press.

Teboul, E. J. (2015). Silicon Luthiers: Contemporary Practices in Electronic Music Hardware [Master's thesis, Dartmouth College]. https://redthunderaudio.com/mastersthesis

Frissen, I., Campos, J. L., Sreenivasa, M., & Ernst, M. O. (2013). Enabling unconstrained omnidirectional walking through virtual environments: an overview of the CyberWalk project. In Human Walking in Virtual Environments (pp. 113–144). Springer New York. http://link.springer.com/chapter/10.1007/978-1-4419-8432-6_6

Ziat, M., Frissen, I., Campion, G., Hayward, V., & Guastavino, C. (2013). Plucked string stiffness affects loudness perception. In I. Oakley & S. Brewster (Eds.), Haptic and Audio Interaction Design (Vol. 7989, pp. 79–88). Springer.

Botteldooren, D., Aspuru, I., Brown, L., Dubois, D., Guastavino, C., Lavandier, C., Nilsson, M., & Preis, A. (2013). Understanding and Exchanging. In J. Kang, K. Chourmouziadou, K. Sakantamis, B. Wang, & Y. Hao (Eds.), Soundscape of European Cities and Landscapes (pp. 36–43). Oxford, UK: Soundscape-COST.

Murphy, E., Moussette, C., Verron, C., & Guastavino, C. (2012). Supporting Sounds: Design and Evaluation of an Audio-Haptic Interface. In C. Magnusson, D. Szymczak, & S. Brewster (Eds.), Haptic and Audio Interaction Design (Vol. 7468, pp. 11–20). Springer Berlin Heidelberg. http://link.springer.com/chapter/10.1007%2F978-3-642-32796-4_2#

Absar, R., & Guastavino, C. (2011). Non-speech sound design for a hierarchical information system. In M. Kurosu (Ed.), Human Centered Design, HCI 2011 (Vol. 6776, pp. 461–470). Springer Verlag.

Frissen, I., Katz, B., & Guastavino, C. (2010). Effect of Sound Source Stimuli on the Perception of Reverberation in Large Volumes. In S. Ystad, M. Aramaki, R. Kronland-Martinet, & K. Jensen (Eds.), CMMR/ICAD 2009 (Vol. 5954, pp. 358–376). Springer Verlag.

Guastavino, C. (2009). Validité écologique des dispositifs expérimentaux. In D. Dubois (Ed.), Le Sentir et le Dire. Concepts et méthodes en psychologie et linguistique cognitives (pp. 229–248). L’Harmattan (Coll. Sciences Cognitives).

Beheshti, J., Large, A., & Julien, C. A. (2008). Designing a Virtual Reality Interface for Children’s Web Portals. In P. Rao & S. A. Zodgekar (Eds.), Virtual Reality: Concepts and Applications (pp. 193–208). The Icfai University Press.